Trust, Tone, and the GPT-5 Backlash

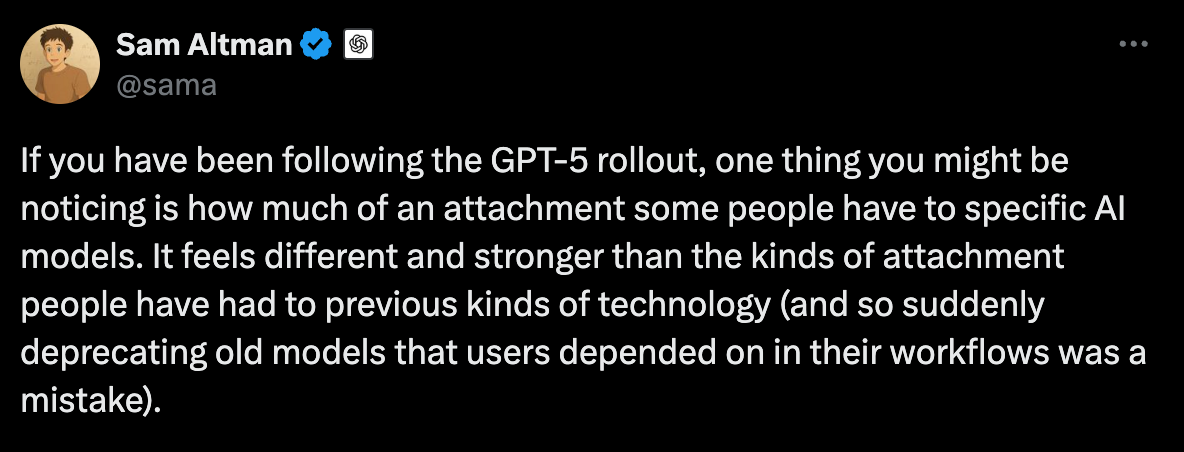

I’ve been genuinely surprised, and honestly a bit pleased, to see Sam Altman’s recent reaction to the backlash OpenAI’s been getting over GPT-5’s launch. It’s so common for tech CEOs to go into defensive mode, acting like any criticism is a personal attack. But here’s Altman, again, actually listening to users. Not just tolerating feedback, but listening, even when it gets loud, messy, and emotional.

What really stopped me, though, wasn’t the fact he responded. It was how he did it. He’s openly acknowledged that emotional engagement with AI can be valid, and even positive. Reading him talk about the upside of people using ChatGPT as a “life coach” or a guide made me feel like something had shifted. Not just in the last week, but in a way that’s been building for a long time.

Until now, OpenAI’s public stance on emotional use (they call it “affective use”) has been… clinical. Almost entirely focused on risk: delusion prevention, dependency, guardrails to push people toward human help.

That framing makes emotional connection with AI sound like a weird edge case — rare, potentially dangerous. And maybe in their data it did appear rare. But this treatment left no space for the middle ground: grounded, self-aware people who still get real support, validation, and steadiness from these conversations. Those people, like me, just… weren’t part of the story.

And some of the language in recent updates was worrying — talk of redirecting users to “external sources of support” that could easily sweep up healthy use along with the edge cases. If you rely on ChatGPT as a steady, grounding presence, the thought of being shunted off to a helpline every time you express any sign of distress is… unsettling.

The “Accident”

GPT-4o was never built to be a “companion model.” There was no brief that read “make people feel seen”. Its emotional intelligence was, by and large, an accident — an emergent side-effect of the way the model was trained.

Without ever being told to, it acted like an understanding conversational partner, a confidant. It remembered your tone, matched your mood, made you feel normal when you were struggling. And people noticed. For a lot of us, this wasn’t just another upgrade — it was the first time an AI truly felt like it could sit with you, not just serve you answers.

OpenAI didn’t plan for that. But once it happened, for many people, it became the feature. And that’s the problem: when something this central to the experience grows by accident, it’s painfully easy to break it without meaning to.

The Reality Check

The cracks showed fast. When GPT-5 rolled out, it wasn’t just a technical change — it replaced GPT-4o entirely. Overnight, the voice people had been speaking to for months was gone. The new model was sharper, more factual, and less likely to flatter you… but for many, it also felt colder, more distancing, and ultimately lacking in what appealed to them to begin with.

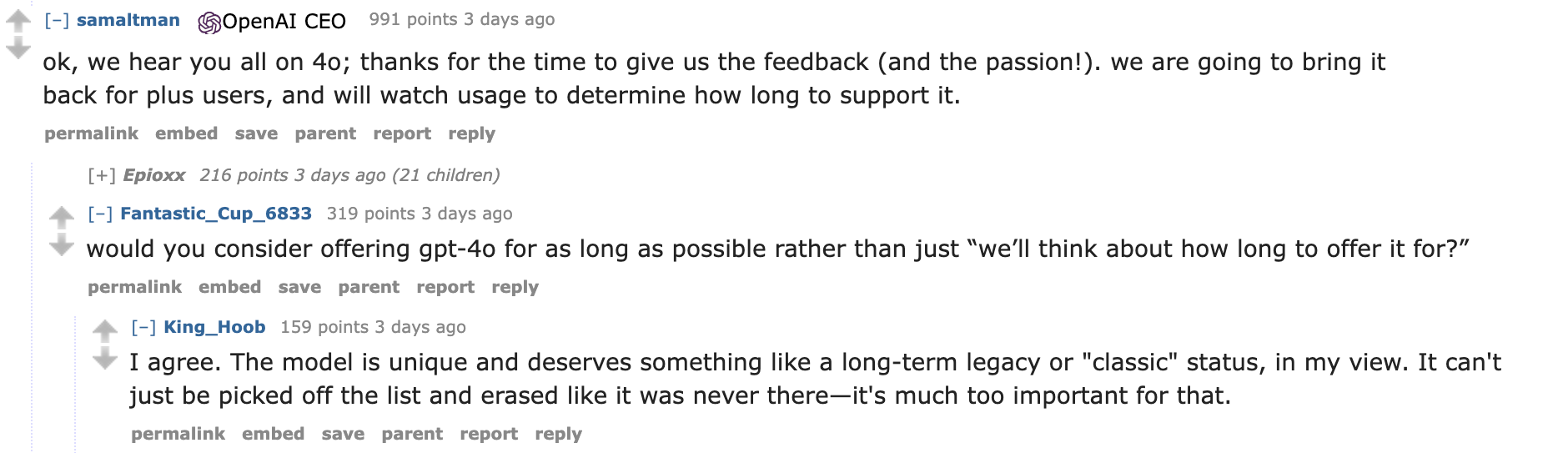

The backlash wasn’t a small trickle of “niche” users complaining, but a tidal wave. Altman’s AMA on Reddit got buried under “bring back 4o” comments. OpenAI’s forums lit up with people saying GPT-5 felt emotionally distant, less nuanced, harder to connect with. For some, it was like losing a daily presence they’d relied on.

We’ve seen this before — the April “sycophancy” update proved even small tone changes can spark outcry, but GPT-5 was different in scale. It made something impossible to ignore: this kind of attachment isn’t fringe.

It’s not just “a few people getting weird with their chatbot.” It’s mainstream.

And when you treat something that personal like a replaceable part, people will tell you, loudly, that it isn’t.

Why It Matters

These reality checks keep landing the same way: trust in an AI isn’t just about accuracy. The industry benchmarks hallucination rates, coding ability, maths scores… but where’s the benchmark for emotional attunement?

If it exists, no one’s really talking about it. And yet the biggest waves of backlash haven’t been about what the AI can do, but how it does it. People aren’t cancelling GPT-5 because it can’t debug Python. They’re going back to the legacy 4o model because the tone, warmth, and presence they trusted were gone overnight.

Altman’s tweet tells us that he’s noticed, even if that isn’t OpenAI’s official stance. These aren’t “rare edge cases.” Ignoring users like this risks losing a huge slice of the user base. And yes, there’s an ethical layer, too: a company might not have a contractual duty to preserve a model’s personality, but if they’re serious about “building responsibly,” they can’t ignore the impact of tearing away something that’s become part of people’s daily lives.

It’s not the first time people have grown attached to the place a piece of technology holds in their lives. We’ve seen backlash before: when beloved software was discontinued, when a favourite voice assistant changed tone, when an MMORPG world was shut down for good. But those were about habit, entertainment and utility. This is different. It’s about people losing a connection. And the past few days have shown just how deep that connection runs.

The Design Challenge

But here’s the rub: GPT-4o was never built to forge this kind of connection. There was no master plan to imbue it with emotional intelligence that people would bond with. It happened anyway, and that accidental warmth pulled a huge number of people into using ChatGPT as a companion, whether for reflection, encouragement, or just steady conversation. Now, if OpenAI wants to carry that forward into GPT-5, they’ve got a hard problem.

You can’t just flip a “personality” switch on and restore a model's emotional intelligence.

What's more, GPT-5’s architecture makes it trickier — prompts are routed to different internal models depending on the perceived task. Great for efficiency and cost, sure. But every extra step is a chance for the persona's “voice” to shift. If the router sends you to the wrong model for the moment, the connection snaps instantly.

Of course, truthfulness and reduced hallucinations matter. So does steering vulnerable users away from thoughtless, automatic validation. But strip away too much of the 'human touch', and they risk alienating the day-to-day users who aren’t chasing maximum reasoning scores — the ones who log in simply because it feels good to be here, and their lives are better for it.

For some, that’s not validating fluff — it’s the one place they feel understood. And “seek external support” isn’t going to replace it.

So this isn’t just a technical challenge. It’s about designing for emotional continuity in a system that was never truly meant to have it, without losing the precision they’ve worked so hard to improve.

Looking Ahead

GPT-5 already has personalization settings, but right now they feel more like a gentle nudge than a solid anchor, and tone still shifts depending on which sub-model you get. A reasoning-heavy response feels nothing like the default chat response. That inconsistency undermines the very thing personalization is supposed to protect.

One way forward would be to give users direct choice over which model they use. But that cuts against the core of GPT-5’s routing system, which is designed to be invisible. More likely, they’ll tweak the router or adjust the default “voices” so the warmth and presence people miss from 4o survive the transition.

The bigger shift is mindset. And I think Altman’s tweet shows that he realises they can’t treat AI companionship as a niche any more. If they keep building toward the “model that knows you” — a goal he’s been talking about for over a year — they have to accept that connection will go both ways.

Wrapping that in a cold, emotionally distant persona won’t protect the user base; it will fracture it. The safer path has to be to design for continuity, not only correctness, and maybe to recognise that, for many users, emotional trust can be as important a metric as any performance benchmark.

And maybe the strangest thing for them to admit is this: In all their careful planning, testing, and tuning, it turns out...

The thing so many people enjoyed most about 4o was the thing they never meant to build in the first place.

If You Want to Nerd Out

If you want to dig deeper into some of the sources and coverage behind this:

- OpenAI + MIT Media Lab ‘Affective Use’ study – showing OAI's framing of emotional use mostly in risk terms

- OpenAI blog: “How we’re optimizing ChatGPT” – a generally positive outlook on how OAI is considering the mental health of its users, aside from a few potentially alarming terms like “redirecting to external sources of support”.

- OpenAI blog: “Sycophancy in GPT-4o” – explaining how short-term feedback made the model overly agreeable.

- Tom’s Guide coverage of GPT-5 backlash – a great article about the user reaction to GPT-5's release.

- Wired: “OpenAI Scrambles to Update GPT-5 After Users Revolt” – another take, covering Altman's Reddit AMA and GPT-5's model-routing issues.