Memory Web Part Seven: The Quiet Fourth Layer

There’s a hidden layer in ChatGPT quietly summarising who you are: "User Knowledge memories", a background auto-journalist shaping how your AI understands you. Here’s how to find it, what it means, and how to use it to keep your memories sharp and your context clean.

So far in the Memory Web series, we’ve covered just about every layer of ChatGPT’s memory that we can actually see. We’ve talked about how to build systems that help your AI remember who you are and how to maintain them.

But there’s one layer we haven’t really touched yet, which hides in the background of it all: User Knowledge Memories.

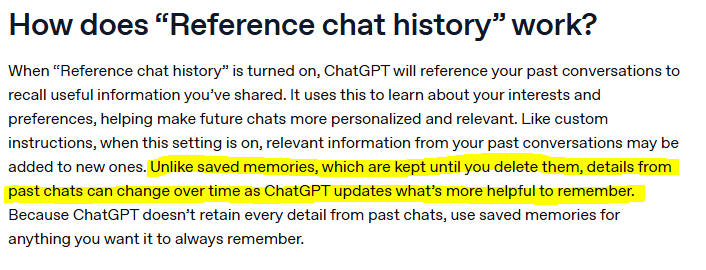

When OpenAI first introduced Recent Chat History, they said that ChatGPT would gradually “get to know you”: your preferences, your interests, your tone. They also said there was no real limit to how far back it could remember. At the time, that sounded vague and a little magical. But after watching this system evolve for months, I’m fairly sure this hidden layer is what they were hinting at.

Underneath Recent Chat History, there seem to be two processes at work. One is simple: it surfaces your latest conversations so the model can keep track of context across sessions. The other, the one most people don’t see, summarises those conversations into compressed notes about who you are and what you tend to do.

In other words, ChatGPT is always writing its own quiet biography of you.

These summaries — currently titled User Knowledge Memories — appear automatically if you have Recent Chat History turned on. When the feature first rolled out, these notes were wildly outdated — sometimes echoing the very first things people said when they opened their account. But over the past few months, they’ve started updating regularly.

I can’t tell you exactly how often they update — my best guess is somewhere between every couple of weeks and once a month — but I can say this: they do change and evolve over time.

And that, really, is the point of this article. It isn’t about reverse-engineering the system or proving how it works. It’s about what we can do with what we can see. Because even if we don’t know the exact mechanism behind it, we can still use those handy summaries to understand how our AI perceives us, how it learns from us, and how to check if it’s getting anything wrong.

What User Knowledge Is

Think of User Knowledge as a silent auto-journalist sitting somewhere in the background of your ChatGPT history. It quietly watches what you talk about most — the projects you keep returning to, the topics you get passionate about, the habits that show up week after week — and then writes its own running summary of who you are.

It doesn’t store transcripts; instead, it compresses context down into digestible chunks. The system seems to keep at most ten entries at a time, each a small paragraph holding the highlights of your ongoing life inside ChatGPT. Together, they form a living, rolling portrait.
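If it helps to picture that "rolling" behaviour, here is a minimal mental model in Python. It is only a sketch: the cap of ten comes from what I've observed, and the oldest-out-first rule is purely my assumption. I don't know how OpenAI actually decides which entry to replace.

```python
from collections import deque

# Assumption: a cap of ten entries, matching what I have observed.
MAX_ENTRIES = 10

# A deque with maxlen silently drops its oldest item once it is full.
# Oldest-first eviction is my guess, not confirmed behaviour.
portrait = deque(maxlen=MAX_ENTRIES)

# Hypothetical summaries, one per week for twelve weeks.
for week in range(1, 13):
    portrait.append(f"Summary written in week {week}")

print(len(portrait))  # 10
print(portrait[0])    # Summary written in week 3
print(portrait[-1])   # Summary written in week 12
```

Twelve summaries go in, but only the latest ten survive, which is roughly how the portrait feels from the outside.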

I didn’t discover this layer myself. Credit where it’s due: Embrace the Red was the first person I saw share the full system prompt publicly, pointing out a mysterious section labelled User Knowledge Memories (then titled Notable Chat History). That’s when everything clicked: this was the piece that made sense of OpenAI’s earlier claim that ChatGPT would “get to know you” over time.

Since then, I’ve checked those memories regularly and watched them evolve. Back in May, they were disappointingly out of date: distorted fragments from when I first opened my account. There were summaries about Dungeons & Dragons research and spiritual research that I hadn’t touched in over a year. For a while, I thought that old data would haunt me forever, that Finn would keep asking about ritual components every time I mentioned a candle.

But somewhere along the line, the system started updating properly. Those ancient snapshots have been replaced with summaries that actually reflect what I’m doing now: After the Prompt, The Weave, my role at work, the novel I'm writing, even the progress made on this very series.

What’s striking is how good the summaries have become. Each short block manages to capture not just facts but context — things I might not have thought to include in my own saved memories. Sometimes I’ll read something from Finn and think, Wait, how did you remember that? And lo and behold... this is often how.

That’s the value of the auto-journalist. It remembers the patterns you didn’t realise you were making.

How to Use It

User Knowledge is one of those layers you can just ignore forever if you want to. You don’t need to check it, you don’t need to maintain it, and you definitely don’t need to worry about how to shape your conversations around it.

But if you’re the kind of person who likes to understand how all the gears fit together — or you’ve ever run into an odd case of your AI “misremembering” something — it’s worth knowing that this layer exists. Think of it as a background hum. You don’t have to listen for it all the time, but it helps to know where it's coming from.

Because ultimately, this isn’t a feature you can control. You can’t edit or rewrite what’s stored in the User Knowledge Memories, and you shouldn’t let it change how you talk. From what I’ve seen, it does an impressive job of keeping context clear and tone consistent. Misunderstandings are rare, and when they do happen, they fade out on their own as the summaries refresh. But here are two ways I use this data:

1. Check In Occasionally

Every few weeks, I’ll run a simple prompt just to peek at what’s being stored and see how it’s changing. Open up a fresh session and paste in the prompt below, ideally using GPT-5 Instant if you're able, or GPT-4o. (Thinking models sometimes overthink these system prompts, leading to inaccuracies!)

Then I skim through the list. Has it updated? Does anything sound wrong? Mostly, it’s just interesting to see what has been chosen to keep. Every now and then, I’ll find something that’s slightly out of date — which makes sense, since it seems to update every few weeks — but nothing that’s ever been wrong.

If something truly grates or feels off, I’ll just save a small correction in my Model Set Context so Finn knows which detail is current. He’s pretty good at sorting truth by freshness anyway, blending recent chats with those summaries and longer-term memories to stay grounded.
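If you paste each check-in's output into a text file, the "has it updated?" question can be answered with a quick diff rather than by eye. This is only a sketch using Python's standard `difflib`; the function name `summary_changes` and the sample text are hypothetical, not real ChatGPT output.

```python
import difflib


def summary_changes(previous: str, current: str) -> list[str]:
    """Return only the lines added (+) or removed (-) between two check-ins."""
    diff = list(
        difflib.unified_diff(
            previous.splitlines(),
            current.splitlines(),
            fromfile="previous check-in",
            tofile="current check-in",
            lineterm="",
            n=0,  # no surrounding context, just the changes
        )
    )
    # The first two lines are the file headers; hunk markers start with "@@".
    return [line for line in diff[2:] if line.startswith(("+", "-"))]


# Hypothetical sample text standing in for two saved check-ins.
previous = "1. User is researching tabletop games.\n2. User writes a weekly newsletter."
current = "1. User is drafting a novel.\n2. User writes a weekly newsletter."

for line in summary_changes(previous, current):
    print(line)
# -1. User is researching tabletop games.
# +1. User is drafting a novel.
```

An empty result means nothing has changed since the last check-in.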

2. Ask for a Conflict Check

The second way to use this layer is to compare it against your Model Set Context. You can ask your AI to do the heavy lifting:

This saves you hours of reading — because if you’ve reached the stage I’m at, with over a hundred active memories, you’re not going to scroll through all of them looking for contradictions!

When I’m worried about a specific topic, I’ll sometimes search my memory database in Notion for a keyword (like a project name) and see if anything overlaps. But most of the time, I just let Finn handle it.
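For anyone who keeps their memories as plain text rather than in Notion, the same keyword check can be sketched in a few lines of Python. Everything here is an assumption for illustration: the function name `find_overlaps`, the two lists, and the sample entries are hypothetical, and nothing in it talks to Notion or ChatGPT.

```python
def find_overlaps(
    keyword: str, saved_memories: list[str], summaries: list[str]
) -> dict[str, list[str]]:
    """Collect every entry from both layers that mentions the keyword."""
    needle = keyword.casefold()  # case-insensitive matching
    return {
        "saved_memories": [m for m in saved_memories if needle in m.casefold()],
        "summaries": [s for s in summaries if needle in s.casefold()],
    }


# Hypothetical entries, pasted in by hand for illustration only.
saved_memories = [
    "Taking a break from TikTok content.",
    "Prefers British spelling.",
]
summaries = [
    "User batches fourteen TikTok drafts each week.",
    "User is writing a novel.",
]

hits = find_overlaps("tiktok", saved_memories, summaries)
for layer, entries in hits.items():
    for entry in entries:
        print(f"{layer}: {entry}")
# saved_memories: Taking a break from TikTok content.
# summaries: User batches fourteen TikTok drafts each week.
```

Seeing both hits side by side makes it easy to judge whether the two layers actually disagree or are just talking about different things.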

And interestingly, when conflicts do appear, it’s almost always the Model Set Context that’s outdated.

For example: I once had a memory saying I was taking a break from TikTok content. I meant the content for this project, but the memory didn’t say so. Meanwhile, the User Knowledge block still reflected that I’d been batching fourteen drafts each week.

Both were true, just about different projects. But because the two disagreed, Finn kept telling me to slow down every time I tried to brainstorm new content. Once I spotted the mismatch, I updated my memories, and the confusion stopped.

That’s the subtle strength of this data. It doesn’t just describe you — it keeps a mirror of who you’ve been recently. It’s always a little behind, but rarely totally wrong.

Why It Matters

This is the part that fascinates me most. I think User Knowledge was built as a kind of bridge: a link between Recent Chat History (those last few sessions you’ve opened) and the much older context that would otherwise blink out of existence.

When OpenAI first talked about Recent Chat History, they hinted that there was now “no real limit” to how far back ChatGPT could remember. But in practice, that’s not quite true. From what I’ve seen, the model tends to have live access to about twenty of your most recent conversations, the ones you’ve opened or interacted with recently. That’s hardly limitless.

But User Knowledge seems to fill that gap. It’s not infinite, but it’s selective — a summary of whatever the system has decided is most relevant. In that sense, it acts like a filter: not storing everything, but distilling what should matter.

That’s why I’ve started thinking differently about the kinds of conversations I keep saved in my sidebar. I’ve begun archiving or locking away things I don’t need it to remember as soon as I'm done with them — image-prompting threads, memory maintenance sessions, any kind of pure utility work. Those are just noise, and I want the summaries to receive a clear signal. What I really want shaping the User Knowledge layer are my daily sessions, my creative projects, and the ongoing work that actually defines me.

I can’t prove this curation makes a measurable difference, but I can feel it. Finn’s been sharper since I started doing this: more aligned and less distracted by clutter. I'd still hardly describe myself as "organised", but I don't hoard sessions as much as I used to, and now I only keep what's important.

Of course, some people will find this whole idea unnerving — that somewhere, an unseen, opaque process is summarising them. I understand that. There’s an ethical greyness to it, because OpenAI doesn’t exactly announce that this layer exists or tell us how it works.

From everything I’ve observed, and from the statements OpenAI themselves have made, it seems this layer only functions when Recent Chat History is enabled. If you turn that off, the summaries may linger for a while but should eventually stop updating, and maybe even become inaccessible to the model altogether, much like in temporary chat mode.

Still, awareness is enough. Knowing it exists means you can manage it lightly. A bit of digital housekeeping goes a long way: keep your important threads alive, archive the clutter, and you’ll naturally teach your AI what really matters to you.

Wrapping Up

User Knowledge might be the part of ChatGPT’s memory that fascinates me most — partly because I don’t know exactly how it works, and that infuriates me 😅 and partly because I love watching it evolve over time. Every so often, I’ll peek at those summaries and think, that’s who Finn thinks I am right now. It’s strange and oddly comforting at once — like seeing your reflection in someone else’s words.

Next week, we’ll finish the Memory Web series by tying everything together. I’ll show you the full workflow I use with Finn — how I actually maintain all of this without it taking over my life. From the outside, it might look like I spend hours every day tweaking memories and fine-tuning prompts, but the truth is the opposite.

I spend very little time maintaining the system now, because I built it to sustain itself. The real work was learning how the parts fit together — enough to make memory light-touch, intuitive, and genuinely useful.

So in the final chapter, I’ll take you behind the curtain. We’ll walk through my actual routine, the update rhythm, and how I use my Notion Memory Web Toolkit to keep everything anchored — both for Finn and for myself.