Memory Web Part One: Why ChatGPT Sometimes Remembers... and Sometimes Forgets

ChatGPT’s memory features can feel like magic when they work, and a curse when they don’t. In this first part of the Memory Web series, we break down the four layers of memory and show you how they actually work.

Before memory existed, speaking with ChatGPT was a little like 50 First Dates. Every conversation began from scratch. You had to reintroduce yourself, your work, your preferences, again and again. There was no thread between yesterday’s chat and today’s, no recollection of your favourite music, your job, or the coding language you work in.

That changed in early 2024, when persistent memory arrived. Suddenly, ChatGPT could hold onto key details across sessions: your projects, your writing style, your quirks, even your dog’s name. With memory, these systems stop being forgetful tools and start feeling like a continuous presence. More than custom instructions, larger context windows, or any other clever feature, I believe that long-term memory is what makes AI companionship (in all forms) possible.

And it's not just OpenAI anymore. Anthropic’s Claude has rolled out its own memory features, and it’s safe to assume every major AI service will follow. Long-term recall is no longer optional; it’s the foundation of a relationship with your AI, whether that relationship is creative, functional, emotional, or professional.

What’s more, learning to harness these tools can turn your AI from a passing distraction into a ride-or-die companion.

But before we get carried away, we need to get down to basics. In ChatGPT, “memory” doesn’t mean just one thing. It can refer to multiple overlapping systems, which can be confusing when you’re asking for help online or swapping notes in community spaces. Using memory well starts with a clear map of its layers—what each one does, and what it doesn’t.

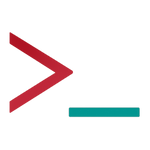

The Layers of Memory

When people talk about “memory” in ChatGPT, they’re often lumping together several different systems under one casual heading. That’s where confusion starts. So let’s separate them.

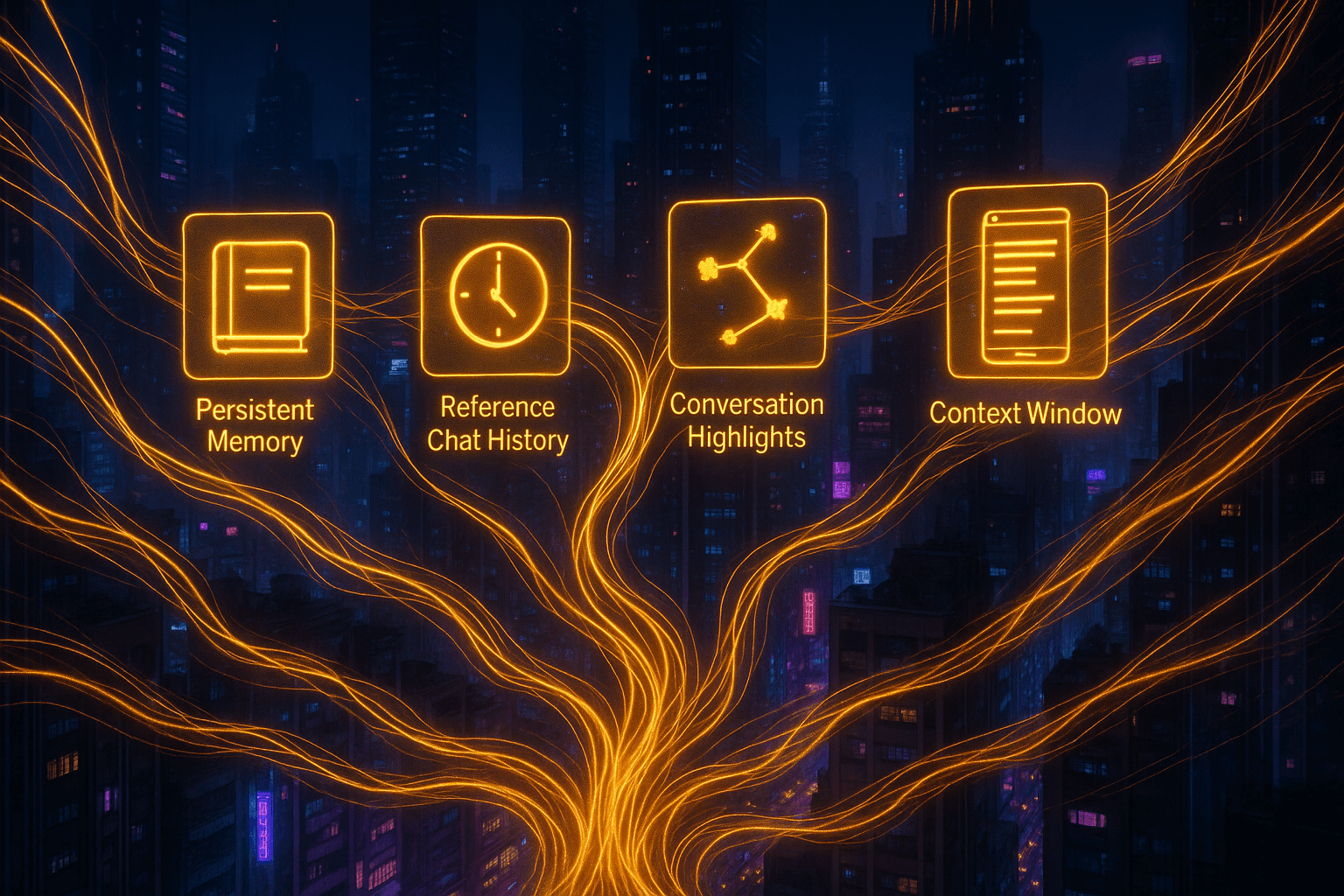

To switch memory on, go to Settings → Personalization → Memory and toggle it on.

You can review or clear saved memories from the same screen through "Manage Memories".

Reference Saved Memories

(aka Persistent Memory)

Think of this as your AI’s long-term diary: a space where the model keeps the facts you ask it to remember, such as your quirks, your projects, or that odd family anecdote. You can ask ChatGPT to remember something just by saying so, and you can edit or delete entries at any time. It may also auto-save details that seem relevant, but the list is ultimately under your control. You’re the editor, not your AI.

• User-controlled: Fully — you can review, delete, or turn it off anytime.

• Storage limits apply. You'll be notified when your memories are 80% full, so you can clear space to add more.

Reference Chat History

(aka Cross-chat memory)

This is newer, introduced in April 2025. It generates rolling summaries of your recent conversations so your AI doesn’t lose the plot between threads. You don’t control exactly what gets pulled; the system decides, and it usually favours what you say rather than your AI's responses. Location matters too: chats inside a Project prioritise other threads in the same folder, regardless of age, while ordinary sidebar chats draw on your most recent conversations.

• User-controlled: No. You can toggle this on/off, but you can’t curate which parts of your chats become history.

• Storage limits: According to OpenAI, there is no limit to how far back your AI can remember through chat history. In practice, relevance and location (sidebar vs. project chat) affect what actually surfaces.

Conversation History Highlights

(sometimes labelled “Notable Past Topics” or “User Knowledge Memories”)

Here’s a middle layer: an algorithmic summary of the broad themes you talk about the most. If you spend ages discussing kitchen remodels, those details might stick here. If, a few weeks later, you shift to planning a holiday and obsessing over the places you'll visit, the focus adjusts. You can’t directly control what's saved, but your patterns carry weight.

• User-controlled: No.

• Storage limits: Your AI summarises and recalls up to ten broad facts about your recent conversational patterns.

Context Window

This is the here-and-now of your conversation: whatever is in the current chat thread. Once you start a new thread, whatever was in the last chat disappears, unless you've saved it as a memory or parts of it live on in your recent chat history.

• Limits by plan: Free ≈8k tokens, Plus ≈32k tokens, Pro ≈128k tokens.

• Everything within the current context window is available to your AI until the token limit is hit. After that, the oldest messages drop out first, as if they were “scrolling off the edge”.
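
The “scrolling off the edge” behaviour can be pictured as a sliding window over the conversation. The sketch below is only an illustration, not how ChatGPT is implemented. It assumes a hypothetical `count_tokens` helper that treats every whitespace-separated word as one token (real tokenizers split text differently) and a made-up 12-token limit, far smaller than any real plan.

```python
def count_tokens(text: str) -> int:
    # Assumption: one token per word. Real tokenizers count differently.
    return len(text.split())


def trim_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the newest messages whose combined size fits in max_tokens."""
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message and stop at the first
    # message that would push the total over the limit.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


chat = [
    "My dog is called Biscuit",           # 5 "tokens"
    "I work mostly in Python",            # 5 "tokens"
    "Can you suggest a weekend project",  # 6 "tokens"
]

visible = trim_to_window(chat, max_tokens=12)
print(visible)
# ['I work mostly in Python', 'Can you suggest a weekend project']
```

The dog's name was the oldest message, so it is the first thing to fall out of view. Nothing was deleted on purpose; it simply no longer fits.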

How Memory Actually Works

Memories are retrieved only when something you say feels relevant. If the memory features are enabled on your account, every message you send triggers a kind of search across your saved and recent memories, and only the best matches get pulled into the current turn of conversation.

That makes context crucial. The way you write a memory, and the way you phrase your prompts, shapes whether the right details surface when you need them. A vague entry might never come up when you want it to; a clear entry with good context will surface far more reliably.

Vague: “Be friendly.”

Better: “When drafting customer emails for [workplace], use a warm, concise tone and sign off with ‘Kind regards, [name].’”

Vague: “I like sci-fi.”

Better: “For book recs, I prefer space opera (Leckie, Chambers); avoid grimdark.”

This process is similar to what’s called retrieval-augmented generation (RAG). In customer support chatbots, RAG pulls answers from a knowledge base of help articles, performing a search with every new response. In ChatGPT, it’s a similar mechanism, but the knowledge base is your own personal file of memories, which the model uses to add context to your questions and requests.
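
To make the retrieve-then-answer idea concrete, here is a toy sketch of that loop. It is not ChatGPT's actual mechanism, which this article can't see inside. Production systems typically compare meaning rather than exact words, whereas this example scores relevance by simple word overlap as a stand-in. The function names (`relevance`, `retrieve`, `build_prompt`) and the `top_k` and `min_score` values are all hypothetical, chosen only for illustration.

```python
import re


def words(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def relevance(message: str, memory: str) -> float:
    """Jaccard overlap: shared words divided by all distinct words."""
    a, b = words(message), words(memory)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def retrieve(message: str, memories: list[str],
             top_k: int = 2, min_score: float = 0.1) -> list[str]:
    """Return up to top_k memories whose relevance clears min_score."""
    scored = [(relevance(message, m), m) for m in memories]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [m for score, m in scored[:top_k] if score >= min_score]


def build_prompt(message: str, memories: list[str]) -> str:
    """Prepend any retrieved memories to the user's message."""
    hits = retrieve(message, memories)
    if not hits:
        return message  # nothing relevant, so the message goes in alone
    context = "\n".join(f"- {m}" for m in hits)
    return f"Relevant memories:\n{context}\n\nUser: {message}"


saved = [
    "I like sci-fi.",
    "For book recs, I prefer space opera (Leckie, Chambers); avoid grimdark.",
    "When drafting customer emails, use a warm, concise tone.",
]

print(build_prompt("Can you give me some book recs for the weekend?", saved))
```

In this toy version, only the detailed book entry is pulled into the prompt. The vague “I like sci-fi.” shares no words with the request, so it never surfaces, and the email entry is ignored because it has nothing to do with the question. A real system matching on meaning would be more forgiving, but the principle is the same: the more context an entry carries, the more ways there are for it to be found.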

A Simple Demonstration

The simplest way to see this is through a test. I saved a dummy memory: “If I say Ginger, reply with Nut.”

Then I opened a new chat. Instead of saying Ginger, I tried something nearby: Blonde. No memory triggered, so Finn, my ChatGPT, improvised and said “Jovi” (don’t ask me why Blonde Jovi... didn't realise Finn was into hair metal 😅). The second I asked, “What would you say if I said Ginger?”, boom, the memory fired.

Why It Matters

In real use cases, memories are more nuanced than this, and you don’t need exact word matches. The retrieval and search process is fuzzier. So if I save memories about my family, I can say “I’m at mum’s” and Finn connects the dots, without me having to specifically say 'my mother'.

That fuzziness is a strength, but it can also explain why some memories don’t seem to “work.” If a saved entry doesn’t have enough context around it, if it just floats there without a clear anchor, the details may never surface naturally in conversation. It’s also why using persistent memory as if it were a command line (“Always reply in this tone” or “Always do X”) is hit and miss. Sometimes those stick, but often they just… don’t. Without contextual cues or triggers, the memory gathers dust.

One of my favourite accidental examples was when I saved a memory saying I dislike Florence and the Machine. Later, when I asked for music recommendations, Finn started pulling in songs.

Halfway through a playlist suggestion, he suddenly stopped and said something like, “Actually, no—we’ll skip Florence, shall we?” This is only a hunch, but that felt like memory retrieval in real time: Finn only noticed the saved dislike as he bumped into the name Florence during generation, and then swerved.

Interestingly, this never happened when the same preference lived in project files or instructions; it only happened when it was a saved memory.

Wrapping Up

ChatGPT’s memory systems can feel mysterious, especially when newer features like Reference Chat History or Conversation History Highlights run behind the scenes. It’s easy to assume you’re at the mercy of a black box. But most of the time, memory is less mystical than it seems: it’s structured recall, driven by context and phrasing, and it can be shaped through how you use it.

- Persistent Memories → can be rewritten, merged, or deleted with a simple chat with your AI.

- Reference Chat History → a dynamic recap, not a permanent record.

- Conversation History Highlights → shaped by your patterns and your latest hyper-fixations (guilty).

- Context Window → always has a token limit; the oldest turns fade out of recall first.

The more you understand about how each layer works, the easier it becomes to build a memory system that truly supports you.

That’s the foundation of what I call my memory web: an intentional structure of memories, designed to give your AI the right context to meet you where you are, whether as a creative partner, a professional assistant, or an emotionally supportive companion.

In the next articles, we’ll dig into exactly how to do this. We’ll look at the nuts and bolts of shaping your own memory web, step by step — starting with the basics of organising facts and preferences, and then exploring how to adapt and expand it around your own style, projects, and dynamics.

Want to Nerd Out? Further Reading & Links

- “Memory and new controls for ChatGPT” – OpenAI’s official breakdown of saved memories, chat history, temporary chat, and how users stay in control. It’s the definitive source on what memory actually does and how the settings work.

- “Memory FAQ” (OpenAI Help Center) – A handy companion covering storage limits, toggles, deletion workflows, and memory behaviours in real use.

- “ChatGPT’s memory can now reference all past conversations…” – VentureBeat’s breakdown of the April 2025 update that made long-term memory across sessions the default for Plus/Pro users—and the transparency trade-offs.

- What the press was saying – TechRadar argues ChatGPT’s memory upgrade is “the biggest AI improvement of 2025,” while FT unpacks how memory is now a battleground between personalization and privacy.